Let’s be honest: bringing AI into your business is about solving real problems, not just chasing the latest tech trend. A successful rollout comes down to a clear strategy, and that strategy has to start with managing how your custom software applications—both desktop and mobile—talk to AI models. Too many leaders jump straight to the sexy algorithms and end up with a high-tech toy that doesn’t move the needle.

The real secret? It's all about the plumbing. Getting the communication layer right between your app and the AI is the difference between a game-changing success and an expensive headache. This is where most projects go off the rails, turning into a messy tangle of hard-coded prompts, surprise API bills, and zero version control. That's why we're so passionate about this at Wonderment Apps. We've seen this movie before, and we built the tool to fix the ending. Our prompt management system is designed to plug right into your existing software, giving you an administrative control panel to modernize your app for AI integration. Stick around, and we'll show you how getting this foundation right makes everything else fall into place.

Your AI Implementation Playbook

Thinking about how to weave AI into your business can feel like a massive undertaking, but it doesn't have to be. The journey begins with a practical, strategic mindset—not with getting lost in complex algorithms.

Too many leaders jump straight to the technology, completely overlooking the foundational pieces that will make or break the project. They end up with impressive but ultimately useless tools that don’t solve a single core business challenge.

The real starting point is a simple shift in perspective: AI is a powerful extension of your existing software, not a total replacement for it. For your mobile and desktop apps to get "smart," they need to communicate with AI models effectively. And it’s this communication layer where most projects turn into a real mess.

The Hidden Challenge of AI Integration

Imagine trying to manage hundreds of conversations at once without any notes or records. That’s exactly what it’s like when your software interacts with AI models without a proper system in place.

This chaos usually shows up in three key areas:

- Disorganized Prompts: Developers often hard-code instructions (prompts) directly into the application. When an AI model's response isn't quite right, they have to dig back into the code to tweak the prompt. This creates a slow, painful cycle of trial and error.

- Runaway Costs: API calls to powerful models like GPT-4 or Gemini cost money. Without a central way to monitor who is using what and how often, these costs can spiral out of control, making it impossible to calculate a clear return on your investment.

- Versioning Nightmares: As you refine your prompts to get better results, you’ll naturally create different versions. Without a "prompt vault" or some kind of version control, you can easily lose track of which prompt works best, leading to inconsistent performance and a ton of wasted effort.

This is exactly why a centralized prompt management system, like the administrative tool from Wonderment Apps, becomes a game-changer. It gives you a control panel for your entire AI operation, turning a disorderly process into a well-oiled machine.
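To make the hard-coded prompt problem concrete, here is a minimal sketch of the alternative: prompts live in a small data store and the application looks them up by name. Everything in it is an assumption for illustration (the JSON layout, the `summarize_ticket` prompt, the `example-model` name, and the helper functions); it is not how any particular product stores prompts.

```python
import json
from string import Template

# Hypothetical prompt store. In practice this might live in a database or an
# admin panel instead of the application source code.
PROMPT_STORE_JSON = """
{
  "summarize_ticket": {
    "model": "example-model",
    "template": "Summarize this support ticket in $max_sentences sentences:\\n$ticket_text"
  }
}
"""


def load_prompts(raw_json: str) -> dict:
    """Parse the prompt store into a dict keyed by prompt name."""
    return json.loads(raw_json)


def render_prompt(prompts: dict, name: str, **params: str) -> str:
    """Look up a prompt by name and fill in its placeholders."""
    template = Template(prompts[name]["template"])
    # substitute() raises KeyError when a placeholder is missing, so mistakes
    # surface before a broken prompt is ever sent to a model.
    return template.substitute(**params)


prompts = load_prompts(PROMPT_STORE_JSON)
rendered = render_prompt(
    prompts,
    "summarize_ticket",
    max_sentences="2",
    ticket_text="Customer cannot reset their password.",
)
print(rendered)
```

Because the wording lives in data rather than code, changing a prompt becomes an edit to the store instead of a new release.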

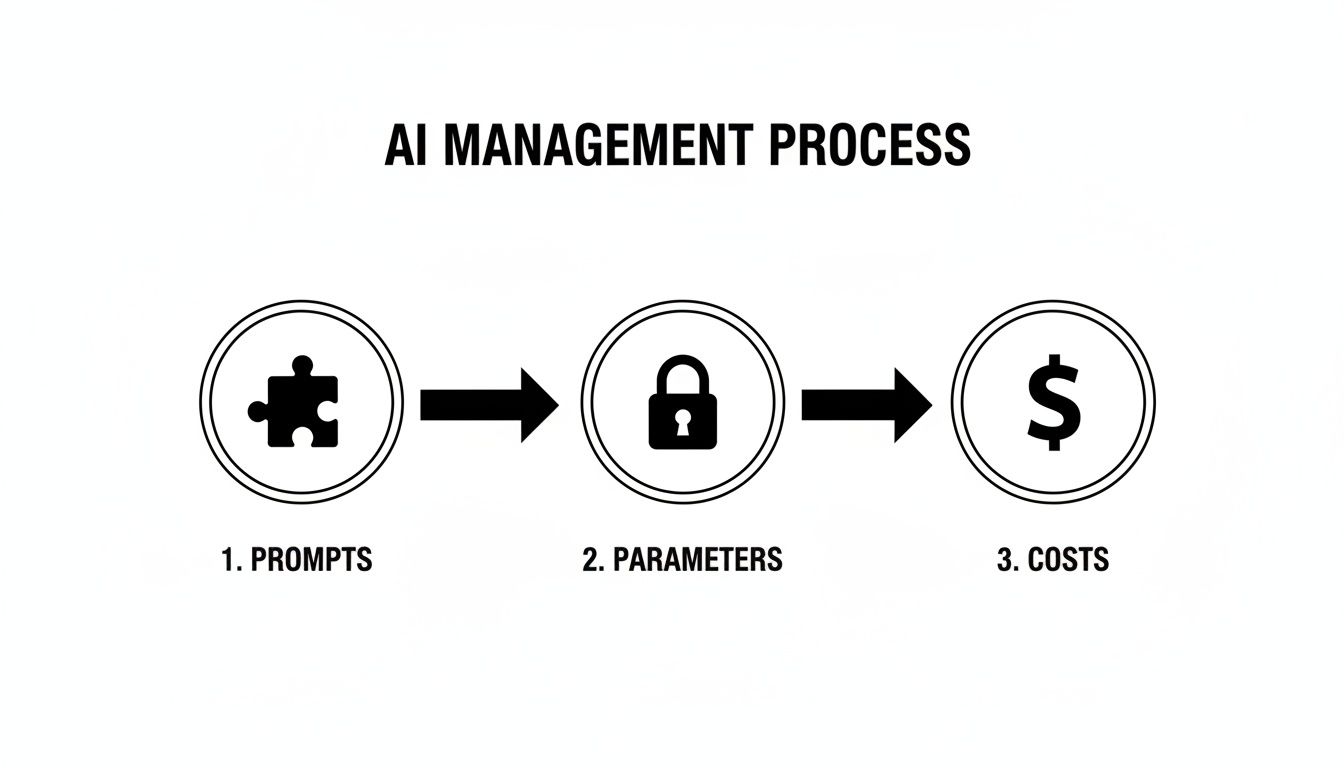

This flow chart breaks down the core components of a managed AI process, showing how you move from prompt creation to securing parameters and keeping an eye on costs.

This structured approach makes it clear: managing your prompts, securing data access, and tracking costs aren't separate tasks. They're interconnected steps essential for building an AI capability that can actually scale.

A successful AI project is 10% algorithm and 90% implementation. The mechanics of how you connect, manage, and monitor the AI will define its long-term value far more than the specific model you choose.

By starting with a plan to manage these critical components, you set the stage for a practical and scalable journey. This guide is your playbook for doing just that, giving you the right mindset and tools to move past the hype and achieve tangible results.

Pinpoint Your First High-Impact AI Project

Before a single line of code is written, you need to take a candid look inward. It’s tempting to chase the big, flashy "moonshot" AI projects, but the most successful implementations always start with a focused, realistic goal. This is the moment you shift from daydreaming about what AI can do to defining exactly what AI will do for you.

The first step is a frank assessment of your business's AI readiness. This isn’t about having a team of data scientists on standby; it's about looking at your foundational capabilities. You need to evaluate your current state across three core pillars: data, technology, and talent.

Evaluate Your AI Readiness

Getting a clear picture of your starting point is crucial. It stops you from picking a project your business simply isn't equipped to handle. Ask yourself these practical questions:

- Data Maturity: Is your data clean, organized, and accessible? AI models are only as good as the data they're fed. If your information is siloed in a dozen different systems or full of errors, your first project will stall before it even starts.

- Technical Infrastructure: Can your existing software and servers handle API integrations and the increased workloads AI might bring? Having a modern, flexible architecture is a massive advantage when figuring out how to implement AI in your business.

- Team Skills: Do you have people with the technical know-how to manage an AI project? If not, are you prepared to partner with an external engineering firm to fill that gap?

An honest "no" to any of these isn't a dead end. It’s a signpost showing you exactly where to focus your prep work. For a deeper dive into this, check out our guide on leveraging machine learning for businesses.

Find the Sweet Spot for Your First Project

Once you know what you’re working with, you can hunt for that perfect first use case. The goal is to find the sweet spot where high business impact meets low implementation complexity. You have to resist the urge to solve every problem at once.

Think of it like this: an e-commerce brand could try to build a massive, complex supply chain forecasting model. Or, they could start with a product recommendation engine on their website. The recommendation engine is faster to implement, way easier to measure, and can deliver a tangible lift in average order value almost immediately.

Your first AI project should be a "quick win." It needs to be visible, measurable, and impactful enough to build momentum and secure buy-in for future, more ambitious initiatives.

The data backs up this strategy. By 2026, AI adoption will have hit critical mass, with 83% of companies calling it a top business priority. This boom promises huge productivity gains everywhere from e-commerce to fintech. But implementation often breaks down without a clear plan; 51% of leaders point to high costs as their top barrier. This just highlights the need for a focused start.

Prioritize and Plan

To lock in your choice, map out potential projects on a simple matrix. Label one axis "Business Impact" (low to high) and the other "Implementation Complexity" (low to high). The perfect first project sits right in that high-impact, low-complexity quadrant.

For example, a fintech app might prioritize an AI-powered fraud detection alert system over building a full-scale algorithmic trading bot. The fraud system solves an immediate, costly problem without requiring a complete architectural overhaul.
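If it helps to see the matrix as something you can actually run, here is a rough sketch. The 1-to-5 scores, the threshold of 3, the quadrant labels, and the third candidate are all assumptions made up for illustration; your own team would supply the real scores.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    impact: int      # 1 (low) to 5 (high), scored by your team
    complexity: int  # 1 (low) to 5 (high), scored by your team


def quadrant(candidate: Candidate, threshold: int = 3) -> str:
    """Place a candidate project in one of four quadrants."""
    high_impact = candidate.impact >= threshold
    high_complexity = candidate.complexity >= threshold
    if high_impact and not high_complexity:
        return "quick win"
    if high_impact and high_complexity:
        return "strategic bet"
    if not high_impact and not high_complexity:
        return "nice to have"
    return "avoid for now"


# Illustrative scores only.
candidates = [
    Candidate("Fraud detection alerts", impact=5, complexity=2),
    Candidate("Algorithmic trading bot", impact=4, complexity=5),
    Candidate("Internal FAQ chatbot", impact=2, complexity=1),
]

# Highest impact first; ties broken by lowest complexity.
ranked = sorted(candidates, key=lambda c: (-c.impact, c.complexity))
for candidate in ranked:
    print(f"{candidate.name}: {quadrant(candidate)}")
```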

By starting with a focused, realistic, and high-impact project, you're not just plugging in technology. You're building a foundation for sustainable, long-term AI success. This strategic first step is the most important one you'll take on your journey.

Choose Your AI Model and Design the Integration

Now that you've identified a high-impact project, it’s time to decide how you’ll actually bring it to life. This is a critical fork in the road where you pick the core technology and sketch out the technical blueprint. Your decision really comes down to two main paths: using a pre-built model or building your own from the ground up.

For most businesses, especially if this is your first real AI project, tapping into powerful, pre-trained models from providers like OpenAI, Google, or Anthropic is the smartest play.

These "off-the-shelf" models are ready to go via simple APIs and give you a massive head start. They've already been trained on colossal datasets, which means you get to use their advanced capabilities without needing a team of machine learning PhDs or mountains of your own data.

The alternative—building a custom model—is a huge undertaking. It demands deep expertise, massive and perfectly labeled datasets, and a serious investment in time and money. While it gives you complete control, it’s usually only necessary for highly specialized use cases where existing models just don't cut it.

Off-the-Shelf vs. Custom AI Models: A Quick Comparison

The choice between a pre-built model and a custom one has big ripple effects, influencing everything from your budget to your launch date. Getting a clear view of the trade-offs is crucial. This table should help clarify things.

| Factor | Off-the-Shelf Models (e.g., GPT-4, Gemini) | Custom-Built Models |
|---|---|---|
| Speed to Market | Fast. Integration can happen in weeks, allowing for rapid prototyping and deployment. | Slow. Development can take many months or even years from concept to production. |
| Initial Cost | Low. You avoid massive R&D expenses and pay for usage via API calls. | Very High. Requires significant investment in talent, data infrastructure, and computing power. |
| Data Needs | Minimal. These models are pre-trained; you only need data specific to your prompts. | Massive. Requires vast amounts of high-quality, labeled data for effective training. |
| Customization | Limited. You can fine-tune and guide the model with prompts but can't alter its core architecture. | Total. The model is built from the ground up to solve your specific, unique problem. |
| Maintenance | Low. The provider handles model updates, security, and infrastructure maintenance. | High. You are responsible for ongoing monitoring, retraining, and infrastructure management. |

As you can see, the speed and cost-effectiveness of pre-built models make them the clear winner for most businesses getting started. If you want to dive deeper into the fundamentals, you can explore our overview of knowledge in artificial intelligence.

Designing the Technical Integration

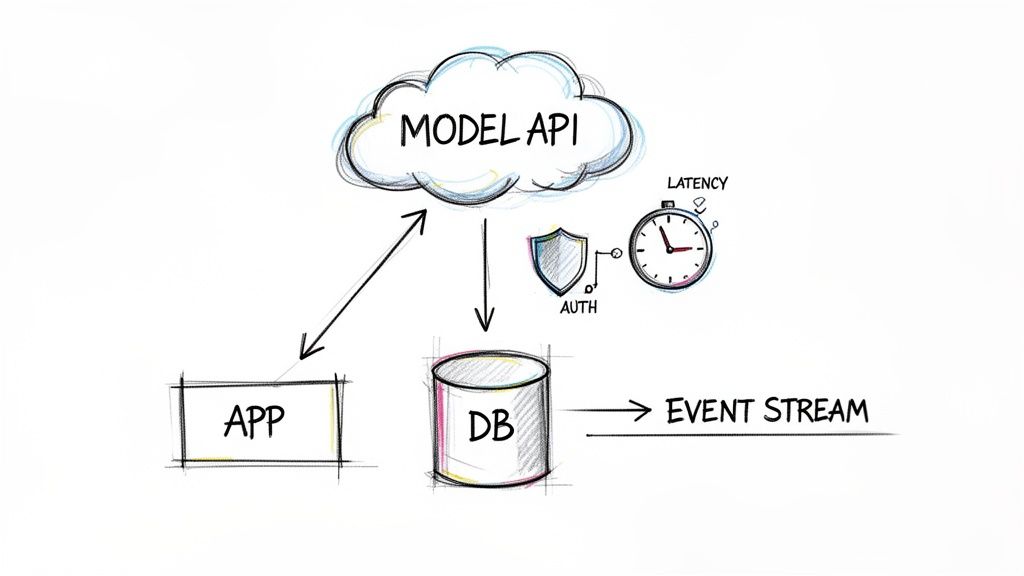

Once you’ve settled on a model, the conversation shifts to architecture. How will this AI engine actually plug into your existing software? This isn’t just a technical footnote; it’s a strategic choice that dictates how reliable, responsive, and scalable your new AI feature will be.

A common starting point is a simple API call. When a user does something in your app—say, clicks a "summarize" button—your software just sends a request to the AI model's API and shows the response. This approach is perfect for straightforward, on-demand tasks.

But for more complex, real-time needs, an event-driven architecture is often a much better fit. Think about a fraud detection system. You don't want to wait for someone to click a button. Instead, a new transaction event should automatically trigger the AI model to analyze the data and raise an alert if something looks fishy.
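Here is a small sketch of the event-driven pattern, using the fraud example. It assumes a tiny in-process event bus and a fake scoring function in place of a real model call; the event name `transaction.created`, the 0.8 alert threshold, and the amount-based scoring rule are invented for illustration. A production system would more likely sit on a message queue or streaming platform.

```python
from collections import defaultdict
from typing import Callable


def score_transaction(transaction: dict) -> float:
    """Stand-in for a real model call; the rule here is purely illustrative."""
    return 0.9 if transaction["amount"] > 10_000 else 0.1


class EventBus:
    """Minimal in-process publish/subscribe bus."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Callable[[dict], None]) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        # Every subscribed handler runs as soon as the event is published.
        for handler in self._handlers[event_type]:
            handler(payload)


alerts: list[str] = []


def fraud_check(transaction: dict) -> None:
    """Score each new transaction and record an alert when it looks risky."""
    if score_transaction(transaction) >= 0.8:
        alerts.append(f"Review transaction {transaction['id']}")


bus = EventBus()
bus.subscribe("transaction.created", fraud_check)

# No button click involved: publishing the event triggers the check.
bus.publish("transaction.created", {"id": "t-1", "amount": 120})
bus.publish("transaction.created", {"id": "t-2", "amount": 25_000})
print(alerts)
```

The on-demand "summarize" button is the simpler case: call the model inside the click handler and display the result.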

Your integration design must plan for scale from day one. A pattern that works for ten beta users will crumble under the weight of ten thousand active customers. Designing for future growth is non-negotiable.

This is where having an experienced engineering partner is invaluable. They can build a solution that not only works today but is also engineered to handle growing user loads and data volumes without breaking a sweat. It's about building your AI initiative to last.

Build, Test, and Deploy with a Modern Mindset

Okay, this is where your strategy starts to feel real. The build phase is all about the hands-on work: plugging your application into the AI model you chose, creating a user experience that doesn't feel clunky, and making sure data moves smoothly and securely. It’s a super dynamic process that just doesn't work with old-school development methods.

For AI projects, those rigid, traditional development cycles are a recipe for disaster. The ground is always shifting as you figure out what the model is actually good at versus what it struggles with. This is exactly why agile development methods are a perfect match. They give your team the freedom to build, test, and tweak features in short sprints instead of holding your breath for one massive, high-stakes launch.

Embrace MLOps for a Reliable, Scalable System

A huge part of this stage has to be MLOps (Machine Learning Operations). If you know DevOps, this will feel familiar—it’s the same idea but built specifically for the unique headaches of machine learning. MLOps is really a set of best practices for getting AI models into a live production environment and keeping them there without everything falling apart.

Putting MLOps into practice means focusing on a few key things:

- CI/CD Pipelines: Continuous Integration and Continuous Deployment (CI/CD) pipelines are your best friend for automating how you test and push out new code. For AI, this also means automating tests for your model's performance, not just your app's code.

- Automated Testing: Your testing has to go deeper than just looking for bugs. It needs to check the AI’s responses for accuracy, relevance, and any weird biases to make sure the user experience stays top-notch.

- Robust Monitoring: This is the big one, and it's the piece everyone forgets. Once your feature is live, you absolutely must have eyes on its performance at all times.
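As a rough idea of what an automated response check could look like in a CI pipeline, consider the sketch below. It assumes a fake model function so it runs offline, and it uses simple keyword and length rules as a crude stand-in for real accuracy, relevance, and bias evaluation; the function names and rules are hypothetical.

```python
def fake_model(prompt: str) -> str:
    """Stand-in for a real model call so the example runs offline."""
    return "Your order ships in 3-5 business days."


def check_response(
    response: str,
    must_include: list[str],
    banned: list[str],
    max_chars: int,
) -> list[str]:
    """Return a list of failed checks; an empty list means the response passed."""
    failures = []
    lowered = response.lower()
    for term in must_include:
        if term.lower() not in lowered:
            failures.append(f"missing required term: {term}")
    for term in banned:
        if term.lower() in lowered:
            failures.append(f"contains banned term: {term}")
    if len(response) > max_chars:
        failures.append(f"too long: {len(response)} > {max_chars} chars")
    return failures


reply = fake_model("When will my order ship?")
failures = check_response(
    reply,
    must_include=["business days"],
    banned=["guarantee"],
    max_chars=200,
)
print(failures or "all checks passed")
```

Running a fixed set of prompts through checks like these on every change gives you an early warning when a prompt tweak or model update degrades the output.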

Why Monitoring Is a Deal-Breaker

Launch day isn't the finish line; it’s the starting gun. Without solid monitoring, you're flying blind. You need live dashboards and alerts that track a few critical metrics.

For instance, a sudden spike in latency (the time it takes for the AI to answer) could point to an issue with the model provider or your own integration, which quickly leads to frustrated users. In the same way, watching your token consumption is non-negotiable for keeping costs in check. The last thing you want is a surprise six-figure bill because an inefficient prompt is burning through more resources than you planned.
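A bare-bones sketch of that kind of tracking might look like this. The `UsageMonitor` class, its thresholds, and the alert wording are assumptions for illustration; a real setup would typically feed these numbers into a dashboard or alerting service instead of returning strings.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class UsageMonitor:
    latency_alert_seconds: float  # alert when a single call is slower than this
    token_budget: int             # alert once cumulative tokens pass this
    latencies: list[float] = field(default_factory=list)
    tokens_used: int = 0

    def record(self, latency_seconds: float, tokens: int) -> list[str]:
        """Record one model call and return any alerts it triggered."""
        self.latencies.append(latency_seconds)
        self.tokens_used += tokens
        alerts = []
        if latency_seconds > self.latency_alert_seconds:
            alerts.append(f"slow response: {latency_seconds:.2f}s")
        if self.tokens_used > self.token_budget:
            alerts.append(
                f"token budget exceeded: {self.tokens_used}/{self.token_budget}"
            )
        return alerts

    def average_latency(self) -> float:
        return mean(self.latencies)


monitor = UsageMonitor(latency_alert_seconds=2.0, token_budget=1000)
for latency, tokens in [(0.5, 400), (3.0, 400), (0.5, 400)]:
    for alert in monitor.record(latency, tokens):
        print(alert)
print(f"average latency: {monitor.average_latency():.2f}s")
```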

Successful AI isn’t a "set it and forget it" technology. It requires continuous monitoring, maintenance, and tuning to deliver lasting value and a positive return on investment.

This operational discipline is what separates a successful AI project from an expensive experiment gone wrong. It’s a fundamental part of how to implement AI in business effectively, making sure your solution performs well and stays cost-effective for the long haul.

From Pilot to Production: The Maturity Leap

Making the move from small-scale experiments to production-ready systems is part of a bigger industry trend. According to Deloitte's State of AI in the Enterprise report, 34% of organizations are now using AI for deep business transformation—think brand new products or completely reinvented processes. This is a huge leap, and it's essential for anyone building compliant healthcare products or launching content-heavy media apps. The real strategic wins come from this kind of deep integration, not just skimming the surface for efficiency gains.

This disciplined approach to building, testing, and deploying is what ensures you don't just launch a feature, but create a sustainable asset that keeps generating value. For more on this, you can learn how to leverage artificial intelligence in our related guide. A modern mindset is what turns a cool AI concept into a powerful business tool that's built to last.

Scale Your AI Initiative and Optimize Performance

Getting your AI feature live is a huge milestone, but it's really just the beginning. The mission now shifts from building to scaling and optimizing. Launching the tech is step one; unlocking its long-term value requires a real focus on both your people and the system's performance. This is how you turn a single project into a core, value-generating asset that grows right alongside your business.

This phase is all about smart change management to get people on board. It’s not enough for the technology to work—your teams and customers have to actually use it and embrace it. You can't just flip a switch and expect everyone to fall in line.

Drive Adoption Through People

Success here really comes down to education and clear communication. Your employees need to understand how the new AI tool helps them do their jobs better, not just adds another complicated step to their workflow.

Think about running hands-on training workshops tailored to different departments. A session for the marketing team playing with an AI content generator will be completely different from one for the customer service team using an AI-powered ticket sorter.

Communicating the benefits to your end-users is just as critical. Use in-app notifications, email newsletters, or short video tutorials to show how the new feature solves a real problem for them. Frame it around the value they get, like "Get personalized recommendations instantly" instead of "We've launched a new AI engine."

Unlock Long-Term Value with Continuous Optimization

On the technical side, continuous optimization is where the real magic happens. The AI landscape is always changing, with new models and techniques popping up constantly. Your system needs the flexibility to adapt without needing a complete tear-down and rebuild.

This is where a powerful management tool becomes your best friend. Imagine trying to fine-tune your AI's responses by digging into the application's source code every single time. It’s slow, it’s risky, and it’s wildly inefficient.

This is precisely the problem the Wonderment Apps prompt management system was built to solve. It gives you a central hub to manage, version, and optimize all your AI interactions, completely separate from your codebase.

Your ability to quickly test and refine AI prompts without deploying new code is a massive competitive advantage. It allows you to adapt to user feedback and improve performance in hours, not weeks.

This kind of agility is becoming non-negotiable as more businesses weave AI into their core operations. In 2025, a staggering 57% of U.S. small and mid-sized businesses (SMBs) are investing in AI technology. That’s up from just 36% in 2023: a jump of 21 percentage points, or roughly 58% in relative terms. This surge shows AI is no longer an experiment; it's essential infrastructure. You can find more insights in business.com's second annual AI adoption survey.

The Power of a Centralized Management System

Our administrative tool is designed for developers and entrepreneurs who need to modernize their existing software for AI. It's not a standalone product; it's an engine you plug into your app to give you complete control. This robust system offers several critical functions that make scaling and optimization practical:

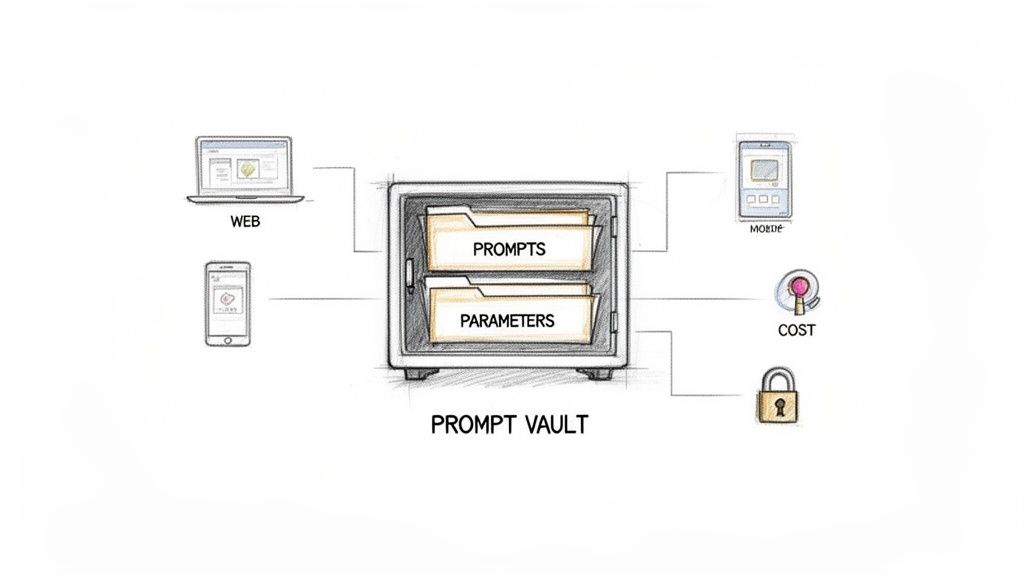

- Prompt Vault with Versioning: This lets you tweak, test, and instantly roll back to previous versions of your prompts. If a new prompt isn't performing well, you can switch back to a proven one with a single click. No drama, no emergency code pushes.

- Parameter Manager: This gives you secure and controlled access to your internal databases. You can define exactly what data the AI can see and use, ensuring sensitive information stays protected while giving the AI the context it needs to be useful.

- Unified Logging System: When you're connecting to multiple AI models, troubleshooting can be a nightmare without a single source of truth. A unified log gives you a clear view of every request and response across all integrated AIs, making it easy to spot and fix issues fast.

- Real-Time Cost Manager: This might be the most important piece. The cost manager gives you a live dashboard of your cumulative spend. This prevents those nasty budget surprises and allows you to tie specific AI functions directly to their operational cost, enabling precise and undeniable ROI calculations.
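To illustrate the versioning and rollback idea from the list above, here is a deliberately simplified sketch. The `PromptVault` class and its methods are hypothetical and written only for this example; they are not the Wonderment Apps API, and a real vault would persist versions in a database and record who changed what.

```python
class PromptVault:
    """Toy in-memory prompt store with version history and rollback."""

    def __init__(self) -> None:
        self._versions: dict[str, list[str]] = {}
        self._active: dict[str, int] = {}

    def save(self, name: str, text: str) -> int:
        """Store a new version, make it active, and return its 1-based number."""
        versions = self._versions.setdefault(name, [])
        versions.append(text)
        self._active[name] = len(versions)
        return len(versions)

    def rollback(self, name: str, version: int) -> None:
        """Make an earlier version active again without deleting history."""
        if not 1 <= version <= len(self._versions.get(name, [])):
            raise ValueError(f"no version {version} for prompt '{name}'")
        self._active[name] = version

    def active(self, name: str) -> str:
        """Return the text of the currently active version."""
        return self._versions[name][self._active[name] - 1]


vault = PromptVault()
vault.save("welcome", "Greet the user politely.")
vault.save("welcome", "Greet the user politely and suggest one product.")

# The new version underperforms, so switch back to the proven one.
vault.rollback("welcome", 1)
print(vault.active("welcome"))
```

Note that rollback only moves a pointer; every version stays in the history, so you can compare or restore any of them later.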

By investing in the right management tools and focusing on both human adoption and technical refinement, you can successfully scale your AI initiative. This is how you transform a promising pilot project into a lasting strategic advantage that truly propels your business forward.

Answering Your AI Implementation Questions

As you start mapping out your AI journey, you’re bound to have questions. We see it all the time. Knowing how to implement AI in your business isn’t just about the tech—it's about getting straight answers to the practical, tough concerns that every leader faces.

Let's cut through the noise and tackle the real-world questions we hear most often from entrepreneurs and executives. These are the details that take a project from a whiteboard concept to a tool that actually generates value.

What's a Realistic Cost to Implement AI in a Small Business?

This is the big one, and the answer is: it varies wildly, but it doesn't have to break the bank.

For most businesses, the most sensible starting point is leveraging a pre-built AI model through an API. In this scenario, your costs are directly tied to usage—often measured in "tokens," which are basically pieces of words—plus the initial development work to hook it into your existing software.
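For a back-of-the-envelope feel of usage-based pricing, the sketch below estimates a monthly bill from request volume and token counts. Every number in it is a placeholder assumption, including the per-million-token prices; check your provider's current price list before relying on any figure.

```python
def estimate_monthly_cost(
    requests_per_day: int,
    input_tokens_per_request: int,
    output_tokens_per_request: int,
    input_price_per_million: float,
    output_price_per_million: float,
    days: int = 30,
) -> float:
    """Estimate monthly API spend in dollars from average usage."""
    cost_per_request = (
        input_tokens_per_request * input_price_per_million
        + output_tokens_per_request * output_price_per_million
    ) / 1_000_000
    return cost_per_request * requests_per_day * days


# Placeholder figures for illustration only.
monthly = estimate_monthly_cost(
    requests_per_day=2_000,
    input_tokens_per_request=800,
    output_tokens_per_request=300,
    input_price_per_million=2.00,
    output_price_per_million=8.00,
)
print(f"Estimated monthly API cost: ${monthly:,.2f}")
```

Doubling the prompt length or the request volume scales the bill directly, which is why trimming wordy prompts is often the cheapest optimization available.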

A simple integration might run a few thousand dollars, while a more complex feature embedded deep in your application could climb into the tens of thousands.

The other end of the spectrum? Building a custom AI model from the ground up. This is a massive financial commitment, easily running into six figures or more. It requires specialized talent and enormous computing resources, so it's not a decision to be taken lightly.

A critical cost that often gets overlooked is ongoing management. Without the right tools, API spending can spiral out of control. That’s why we built a cost manager right into the Wonderment Apps administrative toolkit—it's essential for keeping your operational costs predictable and proving a clear ROI.

Should I Hire an AI Agency or Build an In-House Team?

This really boils down to your long-term strategy versus your immediate needs.

If AI is set to become a core, differentiating part of your business—and you have a pipeline of projects lined up—then building an in-house team makes strategic sense. Just be prepared for a significant, sustained investment in highly specialized (and very expensive) talent.

For most companies just starting their first or second AI project, partnering with a specialized engineering firm like Wonderment Apps is faster, more efficient, and far less risky. An expert partner comes in with battle-tested best practices from day one, which dramatically accelerates your time-to-market. It also frees up your internal team to focus on what they do best, instead of getting bogged down in a steep learning curve.

What Are the Biggest Mistakes to Avoid?

We see a few common, costly mistakes trip people up again and again.

The number one pitfall is starting with the technology instead of a clearly defined business problem. This path almost always leads to an expensive, impressive-sounding project that delivers zero tangible business impact. You're left with a solution desperately looking for a problem to solve.

Another classic mistake is underestimating the need for clean, high-quality data. An AI model is only as good as the data it's trained on and the data you feed it at request time. If your data is a mess, your results will be, too. It’s that simple.

Finally, so many businesses neglect the user experience and completely forget to monitor performance and costs after launch. A successful implementation requires a holistic view that perfectly balances the tech, your business strategy, and the needs of your actual users.

How Can I Measure the ROI of an AI Implementation?

You can't. Not unless you define clear, quantifiable metrics before you write a single line of code.

These key performance indicators (KPIs) must tie directly back to the business problem you set out to solve in the first place.

Here are a couple of real-world scenarios:

- For an AI-powered customer support bot: You’d track direct cost savings from the reduction in human agent hours. You’d also look for an increase in first-contact resolution rates and maybe even revenue gains from higher customer satisfaction scores.

- For a product recommendation engine: You should be measuring the direct lift in average order value (AOV), the increase in conversion rates for recommended products, and the long-term impact on customer lifetime value (CLV).

It is absolutely crucial to establish a baseline for these metrics before you begin. That's the only way you can track them consistently and prove, with hard numbers, the financial impact your AI investment is making.
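The baseline-versus-after comparison can be sketched in a few lines. The figures below (average order value, order volume, project cost) are invented for illustration, and the calculation assumes the entire lift is attributable to the AI feature, which in practice you would want to verify with an A/B test or a holdout group.

```python
def percent_lift(baseline: float, current: float) -> float:
    """Relative change from the baseline, as a percentage."""
    return (current - baseline) / baseline * 100


def roi_percent(gain: float, cost: float) -> float:
    """Return on investment: net gain relative to cost, as a percentage."""
    return (gain - cost) / cost * 100


# Hypothetical numbers for a recommendation engine.
baseline_aov = 50.00      # average order value before launch
current_aov = 54.00       # average order value after launch
orders_in_period = 10_000
project_cost = 25_000.00  # build cost plus API spend for the same period

incremental_revenue = (current_aov - baseline_aov) * orders_in_period
print(f"AOV lift: {percent_lift(baseline_aov, current_aov):.1f}%")
print(f"Incremental revenue: ${incremental_revenue:,.2f}")
print(f"ROI: {roi_percent(incremental_revenue, project_cost):.1f}%")
```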

Ready to modernize your software and build an AI-powered application that scales? The team at Wonderment Apps can help. We provide the engineering expertise and a powerful prompt management system to ensure your AI initiative is a success.